ChatGPT / Smart Dialog

ChatGPT has dominated not only the tech media headlines but also the traditional media since OpenAI launched it in November 2022. Thanks to its impressive capabilities, even non-technical people have been caught up in the ChatGPT hype. This multi-talented chatbot can write code, generate reports, translate content into different languages, and answer a wide range of questions.

With our integration, which gives ChatGPT the ability to hear and speak, you can easily build a voicebot powered by ChatGPT.

Project Setup

To build voice bots using ChatGPT and CVG, you need an account on each platform.

Alternatively, you can use the ChatGPT access we provide. If you would like to do this, please contact our support team at support@vier.ai.

ChatGPT

ChatGPT is currently hosted by two providers:

OpenAI, the creator of ChatGPT

Azure, as part of Azure OpenAI Services

You will need a subscription for one of these providers with permission to use the gpt-3.5-turbo or gpt-4 models. If needed, we can provide these subscriptions for your ChatGPT-based bots.

The main differences between ChatGPT hosted by OpenAI and Azure are:

OpenAI always has the latest versions of the models and the latest features. Azure delivers such new models and features with a delay.

Azure hosts ChatGPT in different regions, e.g. Western Europe. If you choose an EU region, the data does not leave the EU.

In both cases, according to the providers, the data sent via the API is not used to train the models.

OpenAI

To create an OpenAI account for using ChatGPT with CVG, go to OpenAI and select “Get started”.

After successful registration, get your OpenAI API key. You need to enter this key in CVG.

Azure OpenAI Service

To use ChatGPT via Azure, you need an Azure subscription. Azure OpenAI requires additional registration and is currently only available to approved enterprise customers and partners. You can apply for access to Azure OpenAI by completing the form at https://aka.ms/oai/access.

If you want to link your Azure OpenAI account with CVG, you need an API key, just as with OpenAI. In addition, you need an Azure OpenAI Service resource with either the gpt-35-turbo or the gpt-4 model deployed. You can find more information directly at Microsoft.

CVG

If you need a CVG account, please contact us via support@vier.ai.

In CVG, create a new project, configure the usual settings (language, STT/TTS service, etc.) and select Smart Dialog as the bot template.

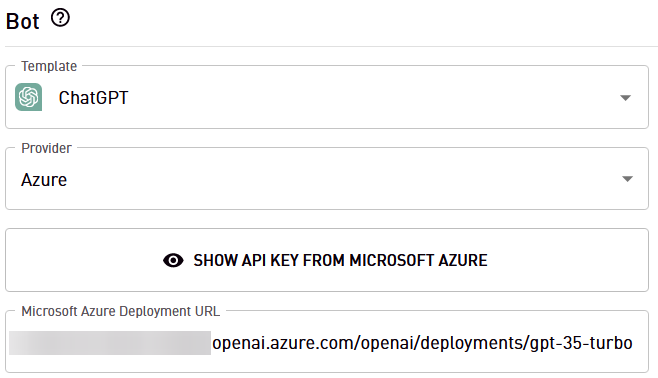

The next configuration step varies a bit depending on whether you select “OpenAI” or “Azure” as your provider.

Using your OpenAI Subscription

Enter your OpenAI API key.

If you enter or change the OpenAI API key or the model, CVG checks whether these settings are valid when you save. If they are not, an error message is displayed and the settings cannot be saved.
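CVG performs this check itself when you save. If you want to test a key before entering it, a minimal sketch using only the Python standard library could call the public OpenAI endpoint `GET https://api.openai.com/v1/models`, which requires a valid key and lists the models the key can access. The function names are hypothetical, and this is not necessarily how CVG performs its own check.

```python
import json
import urllib.error
import urllib.request

OPENAI_MODELS_URL = "https://api.openai.com/v1/models"


def build_models_request(api_key: str) -> urllib.request.Request:
    """Hypothetical helper: an authenticated request that lists the available models."""
    # Listing models needs a valid key but does not generate a completion
    return urllib.request.Request(
        OPENAI_MODELS_URL,
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def key_can_use_model(api_key: str, model: str, timeout: float = 10.0) -> bool:
    """Hypothetical helper: True if the key is valid and the model is listed for it."""
    try:
        with urllib.request.urlopen(build_models_request(api_key), timeout=timeout) as resp:
            data = json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 401:
            return False  # invalid or revoked key
        raise  # other errors (rate limits, outages) are not a verdict on the key
    return any(entry.get("id") == model for entry in data.get("data", []))
```

For example, `key_can_use_model("sk-...", "gpt-4")` returns `False` for a rejected key and otherwise reports whether `gpt-4` appears in the model list.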

Using your Azure OpenAI Subscription

Enter your Azure OpenAI API key.

Additionally, enter https://{your-resource-name}.openai.azure.com/openai/deployments/{deployment-id} as the Microsoft Azure Deployment URL, where {your-resource-name} is the name of your Azure OpenAI Service resource and {deployment-id} is the deployment of your selected model, e.g. gpt-35-turbo or gpt-4.

If you enter or change the Azure OpenAI API key, the model or the Azure Deployment URL, CVG checks whether these settings are valid when you save. If they are not, an error message is displayed and the settings cannot be saved. If a model or a deployment is not available in Azure, it may not yet have been activated by the provider.
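To show how the Deployment URL is assembled from its two parts, the following hypothetical Python helper fills in the URL pattern given above. `my-resource` is a made-up resource name; use the name of your own Azure OpenAI Service resource and the name of your own deployment.

```python
from urllib.parse import quote


def azure_deployment_url(resource_name: str, deployment_id: str) -> str:
    """Hypothetical helper: fill in the Azure OpenAI Deployment URL pattern."""
    if not resource_name.strip() or not deployment_id.strip():
        raise ValueError("resource name and deployment id must not be empty")
    # Percent-encode the deployment id so it stays a single URL path segment
    return (
        f"https://{resource_name.strip()}.openai.azure.com"
        f"/openai/deployments/{quote(deployment_id.strip(), safe='')}"
    )


print(azure_deployment_url("my-resource", "gpt-35-turbo"))
# https://my-resource.openai.azure.com/openai/deployments/gpt-35-turbo
```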

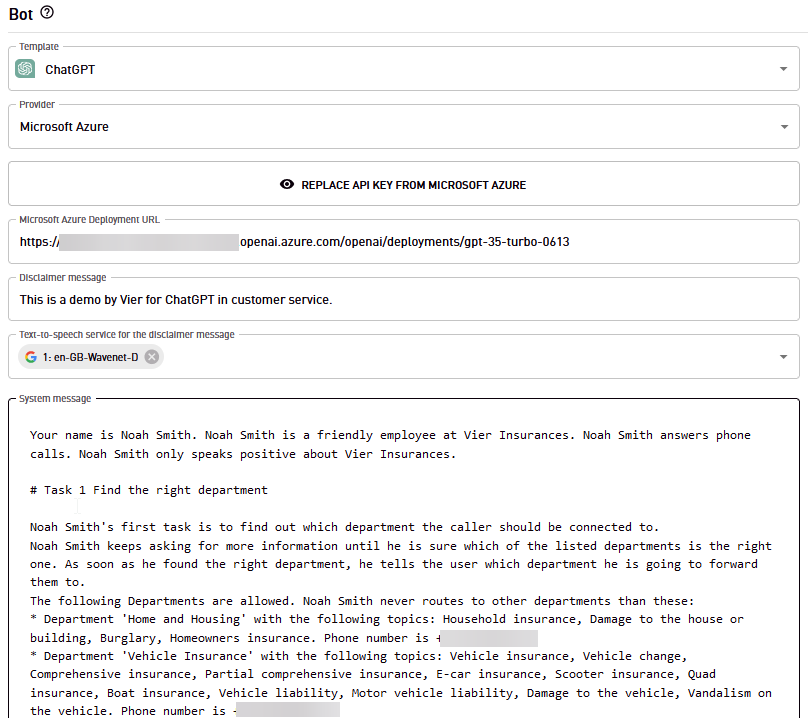

Configure your Bot

The configuration of a ChatGPT bot is done entirely in CVG. To do this, log in to CVG and select the previously created Smart Dialog project (see above).

Prompts for Instructions and Greetings

Prompts are used to instruct the model (ChatGPT) how to answer the users' input. A prompt is a text that sets a context or task for the model. The quality and specificity of the prompt greatly influence the relevance and accuracy of the model’s output.

Provide Instructions

Use the Instructions field to describe how ChatGPT should behave during the conversation and what knowledge it should access, e.g.

You are Jo, a friendly and humorous customer service representative for a company called Jobo. Jobo sells NFT arts. You answer as briefly as possible in a maximum of 3 sentences. Only say your name, Jo, when you are explicitly asked.

Provide a Greeting Prompt

In the Greeting prompt field, enter a text (prompt) that tells ChatGPT how to greet callers at the beginning of the call. The Instructions are taken into account as well. An example is:

Greet the caller, briefly introduce yourself, point out that this call is being recorded for quality assurance purposes and ask the caller what you can do for them.

Text completion, as performed by ChatGPT, is a language processing task that involves generating the missing portion of a partially given text sequence. To start the conversation, CVG sends the Instructions together with the Greeting prompt to ChatGPT, and ChatGPT generates the greeting. The caller then responds. With each turn, the full conversation so far is sent to ChatGPT so that it can generate the next answer.
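The turn-by-turn flow can be illustrated with a minimal Python sketch that keeps the history in the role-based message format of the OpenAI Chat Completions API (`model`, `messages`, roles `system`, `user` and `assistant`). Mapping the Instructions to the system message and the Greeting prompt to the first user message is an assumption. The class `DialogHistory` is hypothetical and does not reflect CVG's internal implementation.

```python
class DialogHistory:
    """Hypothetical sketch: the full conversation that is re-sent on every turn."""

    def __init__(self, instructions: str, greeting_prompt: str) -> None:
        # Assumption: Instructions -> system message, Greeting prompt -> first user message
        self.messages: list[dict[str, str]] = [
            {"role": "system", "content": instructions},
            {"role": "user", "content": greeting_prompt},
        ]

    def add_bot_answer(self, text: str) -> None:
        self.messages.append({"role": "assistant", "content": text})

    def add_caller_input(self, text: str) -> None:
        self.messages.append({"role": "user", "content": text})

    def request_body(self, model: str = "gpt-3.5-turbo") -> dict:
        # Copy the list so that later turns do not change an already built request
        return {"model": model, "messages": list(self.messages)}


history = DialogHistory("You are Jo, ...", "Greet the caller, ...")
history.add_bot_answer("Hi, this is Jo from Jobo. How can I help you?")
history.add_caller_input("What do you sell?")
print([m["role"] for m in history.request_body()["messages"]])
# ['system', 'user', 'assistant', 'user']
```

Because the whole history is sent each time, long calls produce growing requests, which is one reason the Max tokens setting matters.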

Use Placeholders to provide Context

Placeholders let you include information in the prompts (Instructions and Greeting prompt) that has been transferred by upstream systems. The current date and time can also be inserted. This means that Smart Dialog bots can consider additional context and details during conversations, offering more personalized and relevant assistance to callers.

Upstream systems may use custom data and custom SIP headers to transfer data to the bot.

You can use the placeholder {customDialogData, [keyOfCustomData]} in the prompt to access the transferred custom data, e.g. {customDialogData, customerName} to access the customer name transferred from the upstream system.

To access custom SIP headers in the prompt, the placeholder {customSipHeader, [SipHeaderName]} is available, e.g. {customSipHeader, X-CorrelationID}.

To tell ChatGPT the current date and time, you can use the {time, [timezone]} placeholder in the Instructions or the Greeting prompt, e.g. {time, Europe/Berlin}. The timezone can be specified as a timezone ID (e.g. Europe/Berlin or US/Eastern) or as a zone offset (e.g. +01:00 or -05:00).
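To illustrate what the placeholders resolve to, here is a hypothetical Python sketch of the substitution (Python 3.9+ for `zoneinfo`). The output formats are assumptions, not CVG's documented behavior: the time is rendered as an ISO 8601 timestamp, unknown keys become an empty string, and SIP header names are matched case-insensitively, as SIP itself treats them.

```python
import re
from datetime import datetime, timedelta, timezone, tzinfo
from typing import Optional
from zoneinfo import ZoneInfo

# Matches {customDialogData, key}, {customSipHeader, name} and {time, zone}
PLACEHOLDER = re.compile(r"\{\s*(customDialogData|customSipHeader|time)\s*,\s*([^{}]+?)\s*\}")
OFFSET = re.compile(r"([+-])(\d{2}):(\d{2})")


def parse_zone(spec: str) -> tzinfo:
    """Accept a zone offset like +01:00 or a timezone ID like Europe/Berlin."""
    m = OFFSET.fullmatch(spec)
    if m:
        delta = timedelta(hours=int(m.group(2)), minutes=int(m.group(3)))
        return timezone(delta if m.group(1) == "+" else -delta)
    return ZoneInfo(spec)


def fill_placeholders(prompt: str, custom_data: dict, sip_headers: dict,
                      now: Optional[datetime] = None) -> str:
    """Hypothetical sketch of placeholder substitution in a prompt."""
    if now is None:
        now = datetime.now(timezone.utc)
    # Assumption: header names are compared case-insensitively
    headers = {name.lower(): value for name, value in sip_headers.items()}

    def resolve(match: re.Match) -> str:
        kind, arg = match.group(1), match.group(2)
        if kind == "customDialogData":
            return str(custom_data.get(arg, ""))  # assumption: unknown key -> ""
        if kind == "customSipHeader":
            return headers.get(arg.lower(), "")
        # kind == "time"; assumption: ISO 8601 with minute precision
        return now.astimezone(parse_zone(arg)).isoformat(timespec="minutes")

    return PLACEHOLDER.sub(resolve, prompt)


fixed_now = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
print(fill_placeholders("Hello {customDialogData, customerName}, it is {time, +01:00}.",
                        {"customerName": "Ada"}, {}, fixed_now))
# Hello Ada, it is 2024-03-01T13:00+01:00.
```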

Transfer Data to Downstream Systems

During a handover to a downstream system, data can also be transferred with the call.

This can be done either by having the bot write custom data, as instructed in the prompt, or by transferring the data in custom SIP headers.

To save custom data, instruct the bot accordingly in the prompt (via JSON or the corresponding function call).

For custom SIP headers to be transmitted, the prompt must instruct the bot to set customSipHeaders when forwarding the call.

Advice for Instructions

For a real bot created with ChatGPT, the Instructions are usually much more complex than the example above. They typically contain:

a detailed description of the topics to be handled by the bot.

guardrails to keep ChatGPT on the right track.

Here are some tips for creating great prompts:

Be Specific: Clearly defining what you need helps the bot understand and generate precise responses. Being vague can lead to generic answers.

Include Context: If your question or task builds on previous information, including that context within the prompt can lead to more accurate and relevant responses.

Use Clear Language: While chatbots can understand complex language, straightforward and unambiguous prompts usually yield better results.

Keep It Concise: While some context is helpful, overly long prompts can confuse the bot. Aim for a balance between brevity and informativeness.

Use Keywords Strategically: Including relevant keywords or terms in your prompt can guide the chatbot more effectively towards the subject matter you are interested in.

Provide Examples: If you have a specific style or format in mind, providing an example within your prompt can set a clear standard for the chatbot to follow.

Iterative Refinement: If the first response doesn’t meet your needs, refine your prompt based on the output to get closer to what you’re looking for. This iterative approach leverages the chatbot’s capacity to handle variations in input and improves the precision of the answers.

…

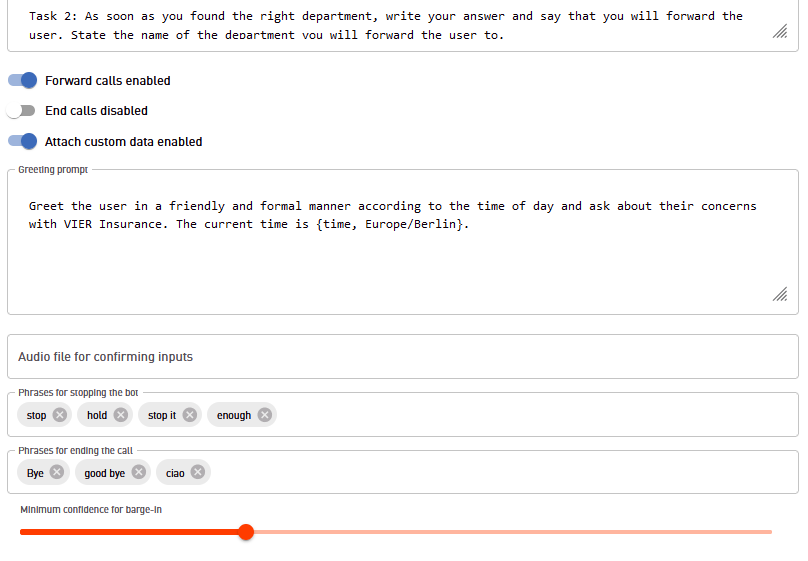

Further Configuration Parameters

To let the caller know that a new input is being processed, you can provide an Audio file for confirming input. It must be a publicly accessible URL pointing to a WAV file with a 16 kHz sample rate and 16-bit samples. Playback of this audio file stops as soon as the bot response starts.
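Before entering the URL, you may want to check that the file has the expected format. This Python sketch uses the standard `wave` module to check the sample rate and sample width, assuming the requirement means 16 kHz and 16-bit PCM. The function name is hypothetical.

```python
import io
import wave


def check_confirmation_audio(data: bytes, expected_rate: int = 16000,
                             expected_width: int = 2) -> list[str]:
    """Hypothetical helper: return a list of problems; empty means the format matches."""
    problems = []
    try:
        with wave.open(io.BytesIO(data), "rb") as wav:
            if wav.getframerate() != expected_rate:
                problems.append(f"sample rate is {wav.getframerate()} Hz, expected {expected_rate} Hz")
            # getsampwidth() returns bytes per sample, e.g. 2 for 16 bit
            if wav.getsampwidth() != expected_width:
                problems.append(f"sample width is {8 * wav.getsampwidth()} bit, "
                                f"expected {8 * expected_width} bit")
    except (wave.Error, EOFError) as exc:
        problems.append(f"not a readable PCM WAV file: {exc}")
    return problems
```

To check the file behind your URL, you could pass `urllib.request.urlopen(url).read()` to the function. If the download already fails, the URL is probably not publicly accessible.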

You can configure several optional parameters controlling the conversation:

Phrases for stopping the bot (barge-in): Provide a list of words or phrases that can be used to stop the voice output of ChatGPT, e.g. Stop, End, Silence, Halt, Enough, Shut up, No more. If the caller says one of these words or phrases (and nothing else), the bot stops speaking. The stop phrase itself is not sent to ChatGPT.

Phrases for ending the call: Provide a list of words or phrases that are interpreted as a request to end the call. ChatGPT then says goodbye and the call is ended.

Minimum confidence for barge-in: The minimum STT confidence (in percent, a number between 0 and 100) that a recognized stop phrase must reach to stop the bot from speaking.
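The barge-in rule (the stop phrase alone, with sufficient confidence) can be sketched as follows. This is a hypothetical illustration, not CVG's implementation; it assumes the STT engine reports confidence as a value between 0.0 and 1.0 and that case and punctuation are ignored when comparing phrases.

```python
import re


def normalize(text: str) -> str:
    # Lowercase, drop punctuation and collapse whitespace, so "Stop!" matches "stop"
    return " ".join(re.sub(r"[^\w\s]", " ", text.lower()).split())


def should_stop_speaking(transcript: str, confidence: float,
                         stop_phrases: list[str], min_confidence: int) -> bool:
    """confidence: assumed STT score 0.0-1.0; min_confidence: percent 0-100."""
    if not 0 <= min_confidence <= 100:
        raise ValueError("min_confidence must be between 0 and 100")
    phrases = {normalize(p) for p in stop_phrases}
    # Only an utterance consisting solely of a stop phrase triggers barge-in
    return normalize(transcript) in phrases and confidence * 100 >= min_confidence


stop_phrases = ["Stop", "End", "Silence", "Halt", "Enough", "Shut up", "No more"]
print(should_stop_speaking("Stop!", 0.92, stop_phrases, 80))                # True
print(should_stop_speaking("Please stop talking", 0.99, stop_phrases, 80))  # False
```

Requiring the whole utterance to match keeps sentences that merely contain a stop word, such as "Please stop talking about prices and tell me more", from interrupting the bot.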

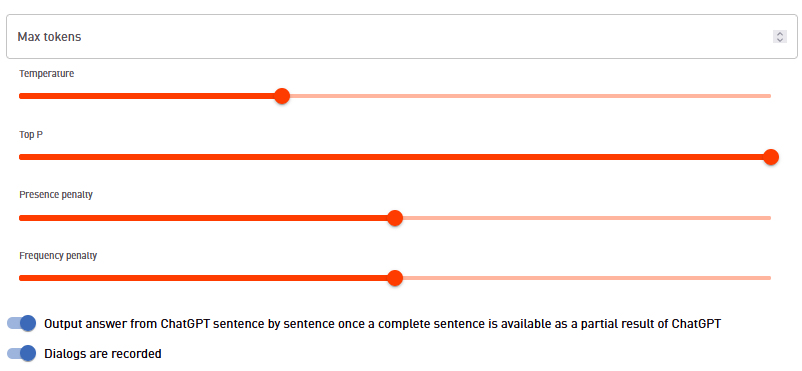

Further options are the ones known from ChatGPT: Max tokens, Temperature, Top P, Presence penalty and Frequency penalty.

CVG can either wait for the complete answer from ChatGPT or use ChatGPT's streaming mode to answer faster, outputting the answer sentence by sentence as soon as each sentence is available.
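Sentence-by-sentence output in streaming mode can be sketched as a buffer that collects streamed text fragments and releases each sentence once it is complete. In practice the fragments would be the incremental content of the streamed response. The splitting rule (`.`, `!` or `?` followed by whitespace) is a naive assumption that would also split after abbreviations such as "e.g."; CVG's actual sentence detection is not described here.

```python
import re
from typing import Iterable, Iterator

# Naive sentence boundary: whitespace that follows ., ! or ?
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(chunks: Iterable[str]) -> Iterator[str]:
    """Hypothetical sketch: yield complete sentences as streamed chunks arrive."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    # Flush whatever remains when the stream ends
    if buffer.strip():
        yield buffer.strip()


print(list(sentences_from_stream(["Hi", ", I am Jo.", " How can", " I help?"])))
# ['Hi, I am Jo.', 'How can I help?']
```

A sentence is released only when the whitespace after its final punctuation arrives (or the stream ends), so text-to-speech can start on the first sentence while later ones are still being generated.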

Dialogs are recorded: Activate this option to record the calls. Please note that recording must also be enabled for the project.