CVG 1.41.0 (10-July-2024)

We’re exhilarated to unveil the Midsummer Release of CVG, a milestone packed with groundbreaking enhancements that elevate one of our core functionalities, speech recognition and synthesis, by harnessing the best tools available today. This release integrates new cutting-edge services from ElevenLabs for Text-to-Speech (TTS) and from Deepgram for Speech-to-Text (STT), delivering a transformative experience for your voicebot users.

But that’s not all: we’ve also refined the integration of existing TTS and STT services. You can now enjoy the lifelike quality of Amazon Polly’s neural voices, effortlessly adjust voice speed within the Speech Service Profile, and use Google’s advanced STT telephony model where supported.

In addition to these major upgrades, we’ve introduced background audio playback, making the user experience more immersive. Adding to the excitement, our new built-in Voicebox-to-Mail bot acts as a voicebox: it transcribes the caller’s speech live and forwards the transcript via email.

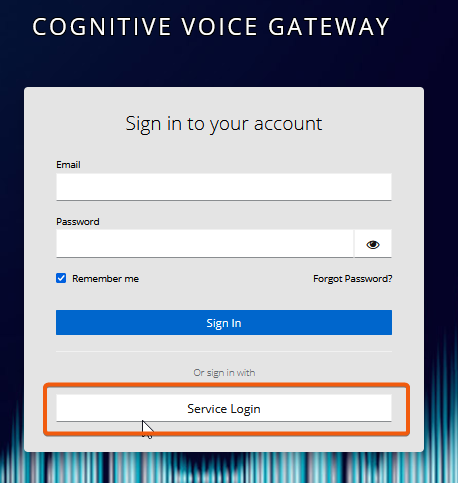

We’re also thrilled to simplify life for our loyal VIER users: they can now sign in to CVG with their existing Service Login accounts.

This release is a giant leap forward, designed to provide a more delightful and powerful voice user experience. We can’t wait for you to dive in and explore these exciting new features and enhancements.

Additional and Improved Text-to-Speech Services

ElevenLabs TTS available (US-hosted)

We are excited to announce the integration of ElevenLabs’ state-of-the-art voice synthesis technology into the latest version of CVG. This collaboration enhances our platform with high-quality voice options that cater to the diverse needs of your voicebot projects.

All ElevenLabs TTS Services are US-hosted

ElevenLabs services are hosted in the United States. If you wish to use ElevenLabs TTS services from our European data center, you should be aware that TTS text and generated audio announcements are processed and stored in the United States.

Key Highlights

Wide Range of Voices: Choose from an extensive library of natural-sounding voices, encompassing different accents, genders, and age groups to align perfectly with your project requirements.

High Fidelity Output: ElevenLabs voices are known for their superior clarity and realism, ensuring your voicebots sound professional and engaging.

Multilingual Support: Build high-quality voicebots that speak multiple languages with the same voice.

Brand Representation: Use your customized ElevenLabs voice to represent your brand consistently across all communications and media, creating a unique and memorable audio identity.

How to Access ElevenLabs Voices

There are three options to use ElevenLabs voices for your voicebots powered by CVG:

Select from the pre-made ElevenLabs voices that CVG provides by default.

For additional options, we can add community-featured voices on request.

If you have an existing ElevenLabs subscription, you can bring your own subscription and integrate your custom voices into CVG.

Use the appropriate Model

When configuring an ElevenLabs TTS profile, make sure you select the model recommended for the voice.

We believe this new feature will significantly elevate the quality of your voicebots. As always, we look forward to your feedback and are committed to continuous improvement.

Use of Amazon Polly neural voices when available

Your voicebot now uses Amazon Polly’s neural text-to-speech voice when a neural model is available for the voice you select. This improvement enables even higher quality voice output compared to the standard voices used previously.

Key highlights:

Neural Voices: Amazon Polly neural voices are now available and cover 33 languages and language variants.

Automatic selection: When selecting an Amazon Polly voice, the system will now automatically select the neural version, if available, to ensure the best possible audio quality for your applications.

This improvement aims to provide users with a more natural and engaging listening experience through better speech synthesis.

No action is required on your part. CVG automatically uses neural voices where they are available.
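The automatic selection rule can be illustrated with a small function. This is a minimal sketch, not CVG's actual implementation: it assumes the input mirrors the `SupportedEngines` list that Amazon Polly's `DescribeVoices` operation reports for each voice, and the function name `select_polly_engine` is hypothetical.

```python
from typing import Sequence

# Engines in order of preference: neural first, standard as the fallback.
PREFERRED_ENGINES = ("neural", "standard")


def select_polly_engine(supported_engines: Sequence[str]) -> str:
    """Return the best engine a Polly voice supports (hypothetical helper).

    `supported_engines` is assumed to look like the SupportedEngines list
    returned per voice by Polly's DescribeVoices operation.
    """
    for engine in PREFERRED_ENGINES:
        if engine in supported_engines:
            return engine
    raise ValueError(f"no supported engine in {list(supported_engines)!r}")


if __name__ == "__main__":
    # A voice offering both engines gets the neural one.
    print(select_polly_engine(["standard", "neural"]))
    # A standard-only voice falls back to the standard engine.
    print(select_polly_engine(["standard"]))
```

Checking against an ordered preference tuple keeps the fallback explicit and makes it easy to add further engines later.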

New Speed Configuration for TTS Profiles

If you configure a TTS profile, you can now set the speech output speed there, provided the TTS provider supports it. This lets you adjust the speech speed centrally.

Speed configuration is currently available for:

Google TTS

IBM TTS

OpenAI TTS

Additional and Improved Speech-to-Text Services

Deepgram STT available (US-hosted)

We are excited to announce that you can now choose Deepgram as an additional Speech-to-Text (STT) provider. Deepgram’s state-of-the-art STT technology enhances our existing suite of STT providers by delivering accurate and efficient transcription in multiple languages.

Key Features

Low Latency and High Accuracy: Deepgram’s STT is recognized for its low latency and high accuracy, which are crucial for providing an exceptional voicebot experience.

Multi-Language Support: Benefit from accurate transcription services in many languages.

How To Try

We encourage you to try Deepgram STT in your projects to see the difference it can make.

Deepgram STT Services are US-hosted, Other Hosting Options on Request

Deepgram STT services are currently hosted in the United States. This is advantageous for our US customers and enables our European customers to evaluate Deepgram STT. If you wish to use Deepgram STT in your production projects and require the STT to be hosted in the EU, please contact us at support@vier.ai.

Improved Transcription Accuracy with Google STT Telephony Model

Accurate transcription is pivotal for your voicebots, especially when the majority of interactions occur via phone calls.

To address this, we have integrated the telephony model of Google Speech-to-Text (STT). This specialized model has been meticulously trained on audio data from phone calls, enabling it to deliver markedly improved transcription results for similar audio data.

Key Highlights:

Enhanced Accuracy: Expect higher transcription accuracy when voicebot interactions occur over phone calls, thanks to the telephony model’s training on phone call audio data.

Automatic Implementation: Projects utilizing Google STT will automatically switch to the telephony model whenever it is available for the specified language. No additional setup or configuration is required.

Currently the telephony model for Google STT is available for the following languages:

Dutch (Netherlands)

English (Australia)

English (Canada)

English (India)

English (United Kingdom)

English (United States)

French (Canada)

French (France)

German (Germany)

Hindi (India)

Italian (Italy)

Japanese (Japan)

Korean (South Korea)

Portuguese (Brazil)

Portuguese (Portugal)

Spanish (Spain)

Spanish (United States)
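The automatic switch to the telephony model amounts to a lookup against the language list above. The sketch below illustrates that rule only; it is not CVG's code. The BCP-47 codes are our mapping of the listed languages, and the model labels `"telephony"` and `"default"` as well as the function name `select_google_stt_model` are illustrative assumptions.

```python
# BCP-47 codes for the languages listed above (our mapping, for illustration).
TELEPHONY_LANGUAGES = frozenset({
    "nl-NL", "en-AU", "en-CA", "en-IN", "en-GB", "en-US",
    "fr-CA", "fr-FR", "de-DE", "hi-IN", "it-IT", "ja-JP",
    "ko-KR", "pt-BR", "pt-PT", "es-ES", "es-US",
})
# Lower-cased copy so lookups are case-insensitive.
_TELEPHONY_LOWER = frozenset(code.lower() for code in TELEPHONY_LANGUAGES)


def select_google_stt_model(language: str) -> str:
    """Return "telephony" if the language has a telephony model, else "default".

    Accepts "de-DE" as well as "de_DE"; the model labels are illustrative.
    """
    normalized = language.replace("_", "-").lower()
    return "telephony" if normalized in _TELEPHONY_LOWER else "default"


if __name__ == "__main__":
    for lang in ("de-DE", "en_us", "sv-SE"):
        print(lang, select_google_stt_model(lang))
```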

We encourage you to provide feedback on your experience with this new feature and look forward to hearing how it enhances your interactions with our voicebots.

Background Audio Capabilities

In the evolving landscape of digital interaction, voicebots have emerged as a pivotal bridge between humans and technology. These intelligent conversational agents streamline tasks, enhance user experiences, and drive efficiency across various domains. One important aspect that has been highly requested by our customers is the integration of background audio capabilities.

Background audio transcends the conventional functionality of voicebots by providing contextual relevance and a more immersive user experience. It creates an environment where information is not just relayed but experienced, making interactions feel more natural and engaging. This auditory context can include soothing music to enhance user comfort, environmental sounds to simulate realistic scenarios, or dynamic audio cues that aid in navigation and comprehension.

Moreover, background audio enhances user engagement by reducing the monotony of conversation-only interfaces and masking potential audio imperfections in real-world environments. This ensures smoother communication and fundamentally enriches the user experience, fostering more intuitive, efficient, and pleasant interactions with voicebots.

Extended Endpoints to Start/Stop Background Audio

We have enhanced our API endpoints to support background audio playback:

Start Playback:

Endpoint: /call/play

New parameter: mode (default: FOREGROUND). Use BACKGROUND to play audio in the background.

Additional options:

repeat: Configures looping/repetition of audio playback.

volume: Controls the playback volume.

Stop Playback:

Endpoint: /call/play/stop

New parameter: mode (default: FOREGROUND). Use BACKGROUND to stop background audio.
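To show how the new `mode`, `repeat`, and `volume` parameters fit together, here is a minimal request-building sketch using only the Python standard library. Only the endpoint paths and parameter names come from this release note. The base URL, the use of POST with a JSON body, the `dialogId` and `url` body fields, and the value types of `repeat` (a count) and `volume` (a number) are assumptions; please check the CVG API reference for the actual schema. Authentication is omitted.

```python
import json
import urllib.request
from typing import Any, Optional

# Hypothetical base URL; replace with your CVG API endpoint.
BASE_URL = "https://cvg.example.com/v1"
VALID_MODES = ("FOREGROUND", "BACKGROUND")


def _check_mode(mode: str) -> None:
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}, got {mode!r}")


def _json_post(path: str, payload: dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request; send it with urlopen()."""
    return urllib.request.Request(
        BASE_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def play_request(dialog_id: str, audio_url: str, mode: str = "FOREGROUND",
                 repeat: Optional[int] = None,
                 volume: Optional[float] = None) -> urllib.request.Request:
    """Start playback; mode="BACKGROUND" plays the audio in the background."""
    _check_mode(mode)
    # Assumed body field names: dialogId and url.
    payload: dict[str, Any] = {"dialogId": dialog_id, "url": audio_url, "mode": mode}
    if repeat is not None:
        payload["repeat"] = repeat  # assumed: number of repetitions
    if volume is not None:
        payload["volume"] = volume  # assumed: numeric volume level
    return _json_post("/call/play", payload)


def stop_request(dialog_id: str, mode: str = "FOREGROUND") -> urllib.request.Request:
    """Stop playback; mode="BACKGROUND" stops the background audio."""
    _check_mode(mode)
    return _json_post("/call/play/stop", {"dialogId": dialog_id, "mode": mode})


if __name__ == "__main__":
    req = play_request("example-dialog-id", "https://example.com/hold-music.wav",
                       mode="BACKGROUND", repeat=3, volume=0.5)
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Pass a built request to `urllib.request.urlopen(req)` to send it. Building and sending are kept separate so the payloads can be inspected or logged before any call is made.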

Cognigy Integration: Extended Play Audio File Node

The “Play Audio File” node in Cognigy will soon include support for background audio, as well as loop and volume control, allowing for more flexibility and customization in voicebot interactions.

Enrich your User Interactions

This enhancement empowers you to create more immersive and engaging voicebot experiences, meeting the needs and preferences of a wide array of applications. We look forward to seeing how this new feature will be utilized to innovate and enrich user interactions.

Built-in Voicebox-to-Mail Bot

The new built-in bot “Voicebox-to-Mail” answers incoming calls on a configurable phone number, transcribes the voice input live, and sends the transcript to a given email address.

Once a call is answered, the caller hears a customizable announcement followed by a signal tone prompting them to leave their message. The live transcript is then sent to a predefined email address, using customizable templates for both the subject and the body. Optionally, the transcription can be carried out by transcription servers operated by VIER on VIER’s own hardware, which ensures a high level of data security and availability.

Configuration

To configure a CVG project as a Voicebox-to-Mail Bot:

Template Selection:

Select “Custom” as the Bot template.

Set “http://mailbox-bot:9090” as the Bot URL.

Custom Configuration:

Input the following configuration details:

{
  "greeting": "You are welcome to leave us a message. Please speak after the signal tone.",
  "signalUri": "https://storage.cognitivevoice.io/vier-public/beep-soft-with-soft-outro-750ms.wav",
  "email": {
    "subjectTemplate": "Received call from {remote}",
    "bodyTemplate": "{remote} has left the following message:\n\n{transcript}\n-- End of message --",
    "from": "noreply@your.mail-server.com",
    "destinationField": "recipientEmail",
    "defaultDestination": "support@vier.ai",
    "smtp": {
      "host": "your.mail-server.com",
      "port": 587,
      "username": "your-username",
      "password": "your-password"
    }
  },
  "maxCallDuration": 180000
}

If you want to attach the recording to the email, add "attachmentMode": "FILE" to the "email" section of the configuration.
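The `subjectTemplate` and `bodyTemplate` values contain `{remote}` and `{transcript}` placeholders. The sketch below shows one way such templates can be filled; it is an illustration under assumptions, not the bot's actual code. We assume simple name-based substitution, that `{remote}` is presumably the caller's number, and the helper name `render` is hypothetical.

```python
import re
from typing import Mapping

# The email templates from the configuration above.
EMAIL_TEMPLATES = {
    "subjectTemplate": "Received call from {remote}",
    "bodyTemplate": "{remote} has left the following message:\n\n{transcript}\n-- End of message --",
}

# Matches {name} placeholders such as {remote} or {transcript}.
_PLACEHOLDER = re.compile(r"\{(\w+)\}")


def render(template: str, values: Mapping[str, str]) -> str:
    """Substitute {name} placeholders; unknown names are left untouched."""
    return _PLACEHOLDER.sub(lambda m: values.get(m.group(1), m.group(0)), template)


if __name__ == "__main__":
    call = {"remote": "+49 30 1234567", "transcript": "Please call me back tomorrow."}
    print(render(EMAIL_TEMPLATES["subjectTemplate"], call))
    print(render(EMAIL_TEMPLATES["bodyTemplate"], call))
```

A regular expression is used instead of `str.format` because `str.format` raises `KeyError` on an unknown placeholder, whereas this version leaves it in the text unchanged.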

Call Settings:

Ensure “Trailing transcriptions enabled” is set in the “Call” section of the CVG project configuration. This setting is crucial for capturing utterances made just before the caller hangs up, which the STT service may deliver only after the call has ended.

Important Notes

Make sure to configure the SMTP settings correctly to ensure successful email delivery.

The destination email address can also be set via custom data or a custom SIP header. The "destinationField" parameter defines the custom data key or SIP header name from which the address is read.

The maximum call duration is set in milliseconds via "maxCallDuration", e.g. 180000 milliseconds (3 minutes).
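The notes above on the destination address and call duration can be made concrete with a short sketch. It is not the bot's implementation. The lookup order (custom data first, then a SIP header, then `defaultDestination`) and the assumption that the SIP header name equals the `destinationField` value verbatim are ours. The function names are hypothetical. The case-insensitive header comparison follows SIP's rule that header field names are case-insensitive.

```python
from typing import Mapping


def resolve_destination(email_config: Mapping[str, str],
                        custom_data: Mapping[str, str],
                        sip_headers: Mapping[str, str]) -> str:
    """Return the recipient address for a call (hypothetical helper).

    Assumed lookup order: custom data, then a SIP header, then the default.
    """
    key = email_config["destinationField"]
    if key in custom_data:
        return custom_data[key]
    # SIP header field names are case-insensitive.
    for name, value in sip_headers.items():
        if name.lower() == key.lower():
            return value
    return email_config["defaultDestination"]


def max_call_duration_minutes(config: Mapping[str, int]) -> float:
    """Convert maxCallDuration (milliseconds) to minutes."""
    return config["maxCallDuration"] / 60_000


if __name__ == "__main__":
    email = {"destinationField": "recipientEmail", "defaultDestination": "support@vier.ai"}
    print(resolve_destination(email, {}, {"RecipientEmail": "sales@example.com"}))
    print(resolve_destination(email, {}, {}))
    print(max_call_duration_minutes({"maxCallDuration": 180000}))
```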

By leveraging the new Voicebox-to-Mail Bot, you can easily streamline your communication processes, ensuring that no important message is missed. For any assistance or more details about this feature, please contact our support team at support@vier.ai.

Further Improvements

Sending dialogID as SIP header when answering a call

Various CVG endpoints, such as /dialog/getDialog to access dialog information, require the dialogID as a parameter.

To streamline the process for call-originating systems, CVG now includes the dialogID in the SIP response with which it answers a call: once the session is established, the dialogID is transmitted in the “X-VIER” SIP header of the response to the INVITE request.
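On the call-originating side, the dialogID then has to be read from the “X-VIER” header of the SIP response that answers the INVITE (for example a 200 OK). The sketch below extracts a header from a raw SIP message. It assumes the header value is the bare dialogID, and it does not handle folded (multi-line) headers. Most SIP stacks expose headers directly, so use your stack's API where available. The function name `extract_header` is hypothetical.

```python
from typing import Optional


def extract_header(sip_message: str, header_name: str = "X-VIER") -> Optional[str]:
    """Return the value of the first header named header_name, or None.

    Header names are compared case-insensitively, as SIP requires.
    """
    lines = sip_message.replace("\r\n", "\n").split("\n")
    for line in lines[1:]:  # skip the start line, e.g. "SIP/2.0 200 OK"
        if not line:  # an empty line ends the header section
            break
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == header_name.lower():
            return value.strip()
    return None


if __name__ == "__main__":
    response = (
        "SIP/2.0 200 OK\r\n"
        "Via: SIP/2.0/UDP 10.0.0.1;branch=z9hG4bK776\r\n"
        "X-VIER: example-dialog-id\r\n"
        "Content-Length: 0\r\n"
        "\r\n"
    )
    print(extract_header(response))
```

The extracted value can then be passed to endpoints such as /dialog/getDialog.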

This new feature simplifies the integration process by ensuring that the required dialogID is immediately available to call-originating systems, allowing for smoother and more efficient operations.

Use your existing Service Login User Account

If you already have a user account for VIER Engage or another VIER product, you can now also use it as a user account for CVG. This is an important step towards easier use of all VIER products via one user account.

To log in with your service login user, select “Sign in with Service Login” in the login screen.